21 Recap

Time flies, and we have already reached the halfway point of this module, so it is time to reflect on what we have done so far.

21.1 Workflow

Our labs have focused on data analysis. The goal here is to try and understand informative patterns in our data. These patterns allow us to answer questions.

To do this we:

- Read data into Python.

- Look at our data.

- Wrangle our data. This often involves exploring the raw data and dealing with missing values (imputation techniques), transforming, normalising, standardising, handling outliers or reshaping.

- Carry out a series of analyses to better understand our data via clustering, regression analysis, dimension reduction, and many other techniques.

- Reflect on what the patterns in our data can tell us.

These steps are not mutually exclusive, and the list is not exhaustive. We may review our data after cleaning, load in more data, carry out additional analyses and/or fit multiple models, tweak data summaries or adopt new techniques. Reflecting on the patterns in our data can give way to additional analysis and processing.
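The steps above can be sketched end to end in a few lines. This is only an illustrative sketch: it assumes scikit-learn's built-in wine data as a stand-in for a file you would normally read with `pd.read_csv`, and the choice of three clusters is an assumption rather than something the data dictates.

```python
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.datasets import load_wine
from sklearn.preprocessing import StandardScaler

# 1. Read data into Python (a built-in dataset stands in for pd.read_csv here)
df = load_wine(as_frame=True).data

# 2. Look at our data
print(df.shape)
print(df.head())
print(df.isna().sum().sum())  # total number of missing values

# 3. Wrangle: standardise each column to mean 0 and unit variance
scaled = pd.DataFrame(StandardScaler().fit_transform(df), columns=df.columns)

# 4. Analyse: cluster the observations (three clusters is an assumption)
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
scaled["cluster"] = kmeans.fit_predict(scaled)

# 5. Reflect: how do the clusters differ on the original variables?
summary = df.groupby(scaled["cluster"]).mean()
print(summary.round(2))
```

In practice we would loop back from step 5 to earlier steps, for example trying a different number of clusters or a different scaling.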

21.2 So far

As a quick reminder, our toolkit comprises:

- Pandas - table-like data structures, packed with methods for summarising and manipulating data. Documentation. Cheat sheet.

- Seaborn - helps us create statistical data visualisations. Tutorials.

- Scikit-learn - an accessible collection of functions and objects for analysing data. These analyses include dimension reduction, clustering, regression and evaluating our models. Examples.

These tools form part of the core Python data science stack and allow us to tackle many of the elements from each week.

21.2.1 Week 2

Tidy data, data types, wrangling data, imputation (missing data), transformations.
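As a reminder of what imputation and transformation look like in code, here is a minimal sketch. The toy table, its column names and the choice of median imputation and a log transform are all assumptions made for illustration; they are not taken from the lab datasets.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Hypothetical toy data with missing values (not a course dataset)
raw = pd.DataFrame({
    "income": [21000.0, 35000.0, np.nan, 250000.0, 42000.0],
    "age": [23.0, np.nan, 41.0, 58.0, 35.0],
})

# Check data types and count missing values per column
print(raw.dtypes)
print(raw.isna().sum())

# Impute: replace each missing value with its column median
imputer = SimpleImputer(strategy="median")
imputed = pd.DataFrame(imputer.fit_transform(raw), columns=raw.columns)

# Transform: a log transform reduces the pull of the very large income
imputed["log_income"] = np.log(imputed["income"])
print(imputed)
```

The median is used here because it is less sensitive to the extreme income than the mean would be; other strategies may suit other data better.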

21.2.2 Week 3

Descriptive statistics, distributions, models (e.g., regression).
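The sketch below revisits these ideas on simulated data. The data are generated with a known slope of 2 and intercept of 1 (both assumptions chosen for illustration), so we can check that the fitted regression recovers values close to them.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

# Simulated data: y depends linearly on x, plus normally distributed noise
rng = np.random.default_rng(42)
x = rng.uniform(0, 10, size=200)
y = 2.0 * x + 1.0 + rng.normal(0, 1.0, size=200)
data = pd.DataFrame({"x": x, "y": y})

# Descriptive statistics: count, mean, std, min, quartiles, max
print(data.describe())

# Skewness gives a rough idea of the shape of each distribution
print(data.skew())

# Fit a simple linear regression of y on x
model = LinearRegression().fit(data[["x"]], data["y"])
slope = model.coef_[0]
intercept = model.intercept_
r2 = model.score(data[["x"]], data["y"])
print(f"slope={slope:.2f}, intercept={intercept:.2f}, R^2={r2:.2f}")
```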

21.2.3 Week 4

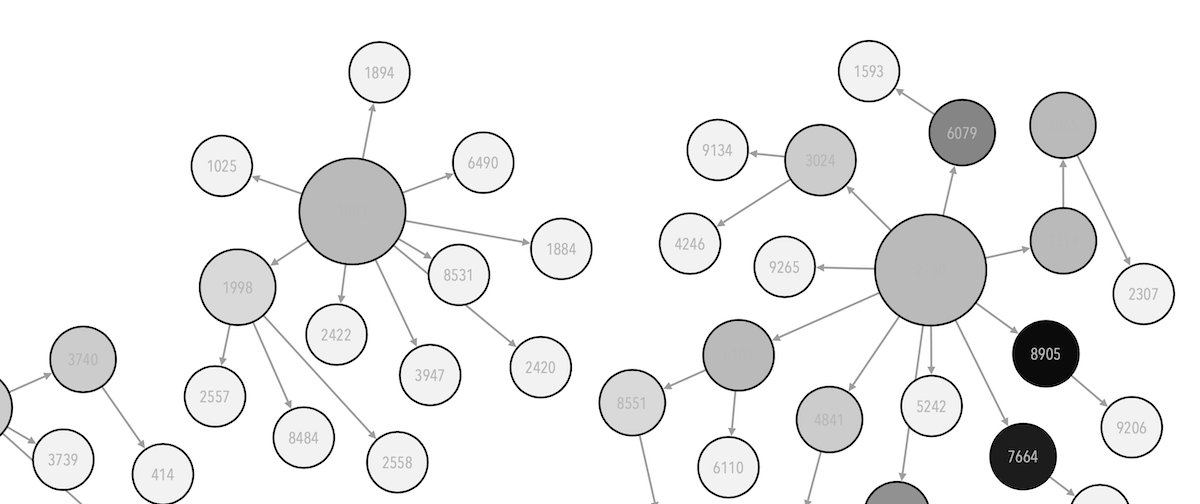

Feature selection, dimension reduction (e.g., Principal Component Analysis, Multidimensional Scaling, Linear Discriminant Analysis, t-SNE, Correspondence Analysis), clustering (e.g., Hierarchical Clustering, Partitioning-based clustering such as K-means).
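A compact sketch of two of these techniques used together: reduce the data to two principal components, then cluster in the reduced space with both a hierarchical and a partitioning-based method. It assumes scikit-learn's built-in wine data, and the choices of two components, three clusters and Ward linkage are illustrative assumptions.

```python
from sklearn.cluster import AgglomerativeClustering, KMeans
from sklearn.datasets import load_wine
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Standardise first so that no variable dominates because of its units
X = StandardScaler().fit_transform(load_wine().data)

# Dimension reduction: keep the first two principal components
pca = PCA(n_components=2)
components = pca.fit_transform(X)
print(pca.explained_variance_ratio_)  # share of variance per component

# Hierarchical clustering (Ward linkage) on the reduced data
hier_labels = AgglomerativeClustering(n_clusters=3, linkage="ward").fit_predict(components)

# Partitioning-based clustering (k-means) on the same reduced data
kmeans_labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(components)

print(hier_labels[:10])
print(kmeans_labels[:10])
```

Note that the cluster numbers themselves are arbitrary, so the two methods can agree on the groups while labelling them differently.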

We have also encountered two dataset sources.

You can learn a lot by picking a dataset, choosing a possible research question and carrying out a series of analyses. I encourage you to do so outside of the session. It certainly forces one to read the documentation and explore the wonderful possibilities.

21.3 This week

Trying to understand patterns in data often requires us to fit multiple models. We need to consider how well a given model (a k-means clustering, a linear regression, a dimension reduction, etc.) performs.
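One common way to judge how well a regression model performs on data it has not seen is k-fold cross-validation. The sketch below uses simulated data with made-up coefficients, since it is only meant to show the mechanics; five folds and the R² score are assumptions, not requirements.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score

# Simulated data: three predictors with known (made-up) coefficients
rng = np.random.default_rng(0)
X = rng.normal(size=(150, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + rng.normal(scale=0.5, size=150)

# Split the data into five folds; each fold is held out once for testing
cv = KFold(n_splits=5, shuffle=True, random_state=0)

# Fit on four folds and score (R^2) on the held-out fold, five times over
scores = cross_val_score(LinearRegression(), X, y, cv=cv, scoring="r2")
print(scores.round(3))
print(f"mean R^2 = {scores.mean():.3f}, std = {scores.std():.3f}")
```

The spread of the five scores gives a sense of how stable the model's performance is across different subsets of the data.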

Specifically, we will look at:

- Comparing clusters to the ‘ground truth’ - the wine dataset

- Cross validation of linear regression - the crime dataset

- Investigating multidimensional scaling - the London borough dataset

- Visualising the overlap in clustering results
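As a preview of the first and last items, the sketch below compares k-means clusters with known class labels and tabulates how they overlap. It assumes scikit-learn's built-in wine data, which may differ from the wine dataset used in the lab, and the adjusted Rand index is just one of several possible agreement measures.

```python
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.datasets import load_wine
from sklearn.metrics import adjusted_rand_score
from sklearn.preprocessing import StandardScaler

wine = load_wine()
X = StandardScaler().fit_transform(wine.data)
truth = wine.target  # the 'ground truth': which cultivar each wine came from

# Cluster without using the labels
labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X)

# Adjusted Rand index: 1 means perfect agreement, values near 0 mean chance level
ari = adjusted_rand_score(truth, labels)
print(f"Adjusted Rand index: {ari:.3f}")

# Contingency table: rows are true classes, columns are clusters
overlap = pd.crosstab(
    pd.Series(truth, name="cultivar"),
    pd.Series(labels, name="cluster"),
)
print(overlap)
```

A table like `overlap` can be passed to a heatmap function (for example in Seaborn) to visualise where clusters and true classes agree.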