15 Introduction

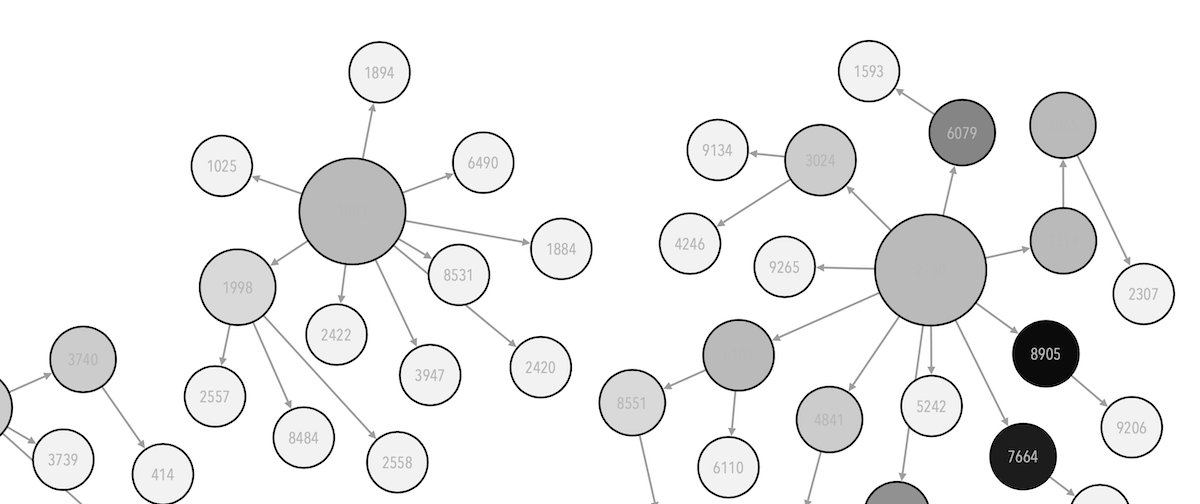

This week explores the notion of structures and how data science can enable the extraction of “hidden” underlying groups – clusters – and hierarchical structures from data. We discuss the different techniques to surface and generate artificial boundaries and how the resulting artefacts can be interpreted. This session then investigates how artificial and abstract spaces can be constructed through different “projection” techniques, and how these spaces help us navigate data that are high-dimensional in nature and apply analytic frameworks to them.

The practical lab explores the use of clustering techniques, compares alternatives, and discusses interpretability issues. We also review how to deal with data sets that consist of several variables.

15.1 Highlights of the lecture

We start our discussions this week with the notion of spaces and how the spaces we construct can help us understand complex phenomena. We then look at how spaces come into play when we explore high-dimensional data sets, and how a number of techniques help us construct artificial spaces – projections – that provide the basis for further operations. Next, we turn to cluster analysis and discuss a number of techniques that can help us explore “structures” in low- and high-dimensional data sets. Finally, we explore how projection spaces and notions of distance come into play.

15.2 Practical Lab Session

In the practical session, we will explore a number of computational routines and tools that support dimension reduction and projection tasks. We will also look into cluster analysis and explore functions that can help us run cluster analysis routines. We will pay particular attention to the interpretation of these complex tools and will work with multivariate data sets.
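To make the idea of a cluster analysis routine concrete, here is a minimal sketch of k-means clustering written in plain Python. It is not the lab's own code: the function names (`euclidean`, `centroid`, `k_means`), the made-up six-row data set, and the choice of starting centres are all assumptions made for illustration. It shows the two ingredients discussed above: a notion of distance between observations, and a rule that uses it to draw artificial boundaries between groups.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two points of equal dimension."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def centroid(points):
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))

def k_means(points, initial_centres, max_iter=100):
    """Alternate between assigning points and updating centres until stable."""
    centres = list(initial_centres)
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centre.
        new_labels = [
            min(range(len(centres)), key=lambda j: euclidean(p, centres[j]))
            for p in points
        ]
        if new_labels == labels:
            break  # no point changed cluster, so the result is stable
        labels = new_labels
        # Update step: move each centre to the mean of its members.
        for j in range(len(centres)):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old centre if a cluster is empty
                centres[j] = centroid(members)
    return labels, centres

# Made-up data (an assumption): six observations of three variables,
# forming two well-separated groups.
data = [(1.0, 1.0, 0.5), (1.2, 0.8, 0.4), (0.9, 1.1, 0.6),
        (5.0, 5.2, 4.9), (5.1, 4.8, 5.0), (4.9, 5.0, 5.1)]

# Starting centres are picked by hand here; real routines usually pick
# them at random and repeat the run several times.
labels, centres = k_means(data, initial_centres=[data[0], data[3]])
print(labels)  # → [0, 0, 0, 1, 1, 1]
```

The cluster labels are arbitrary identifiers, and the result depends on the distance measure and the starting centres, which is one reason interpretation needs care. In practice a library routine would normally replace this hand-written loop.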

15.3 Reading lists & Resources

15.3.1 Required reading

- A very good overview of variable and feature selection (read the first four sections; the rest covers more advanced methods): Guyon, Isabelle, and André Elisseeff. “An introduction to variable and feature selection.” The Journal of Machine Learning Research 3 (2003): 1157-1182.

- A good overview of dimension reduction techniques: read Section 1 for a general discussion of dimension reduction, Section 2 on PCA, and Appendix H on MDS for a technical explanation.

- On clustering methods: Tan, P., Steinbach, M. & Kumar, V., 2006, Introduction to data mining, Pearson Addison Wesley, Boston, Mass; London. Chapter 7 covers cluster analysis and is a really good introduction: https://www-users.cs.umn.edu/~kumar001/dmbook/ch7_clustering.pdf

15.3.2 Optional reading

- An introductory overview of PCA and its use in biotechnology: Ringnér, Markus (2008). “What is principal component analysis?”. Nature biotechnology (1087-0156), 26 (3), p. 303.

- Rapkin, B.D. and Luke, D.A., 1993. Cluster analysis in community research: Epistemology and practice. American Journal of Community Psychology, 21(2), pp.247-277. [pdf - login through library account]

- A non-technical introduction to MDS: Jaworska, Natalia, and Angelina Chupetlovska-Anastasova. “A review of multidimensional scaling (MDS) and its utility in various psychological domains.” Tutorials in Quantitative Methods for Psychology 5.1 (2009): 1-10.

15.3.3 Further reading

- Galbraith, S., Daniel, J.A. and Vissel, B., 2010. A study of clustered data and approaches to its analysis. Journal of Neuroscience, 30(32), pp.10601-10608.

- Fonseca, J.R., 2013. Clustering in the field of social sciences: that is your choice. International Journal of Social Research Methodology, 16(5), pp.403-428. [pdf]

- A useful blog post on Feature Selection – https://machinelearningmastery.com/feature-selection-with-real-and-categorical-data/